Easy Stable Diffusion + Textual Inversion!

Wow, the pace at which the AI image generation space has changed is something I have seldom seen in my life as a nerd. It reminds me of early Bitcoin tools or the Apple App Store, when it seemed like every day brought some amazing new app, tool, or offering.

I think Stable Diffusion (SD) is even more engaging for the community than either of those, since it’s both open source and produces something tangible and fun (it takes some imagination to see why looking at blockchain ledgers is ‘cool’).

I personally had so much fun hacking on the original CompVis repo and building my own workflows. Even after a good amount of work though, the way I had been using Stable Diffusion wasn’t really sufficient for anything other than playing around or running tests on prompt syntax.

If I wanted to use SD as an artist and bring images to life based on my creative vision, I was missing a UI, simple integration between txt2img/img2img/face restoration/upscaling/etc., and so much more I didn’t even realize I needed. Thankfully, a group of people much smarter than me have already gotten together and built all of this out.

You can find the Automatic1111/stable-diffusion-webui repository on GitHub.

This tool really lets you peek into the power of what these kinds of tools will do for artists in the very near future. The repository is updated frequently with new features or tools too – below we’ll look at setting up textual inversion.

Install

Installation is simple and covered so well in the repository that I won’t add anything here. After installing the right version of Python, simply running webui-user.bat will install everything you need except the Stable Diffusion model checkpoint (.ckpt). The model download links are also in the repository.

Extras

I’d recommend installing GFPGAN and ESRGAN as described in the repository. These let you fix faces and easily upscale images. CodeFormer is also included, but it is only downloaded the first time you use it.

Making it Public

If you’re reading this, the likelihood you’ve been playing with AI image generators and SD for the past weeks/months is high. If you’ve been running SD on your machine, you’ve almost certainly wanted to show your friends too. Kudos to you if you exposed your machine publicly and walked people through entering terminal commands (I guess :P). Now, thankfully, we have an easier way.

If you want to launch stable-diffusion-webui with a public URL in addition to your localhost URL, you can make a simple edit to webui.bat:

Find the :launch label and change the lines under it to the following:

```bat
:launch
echo Launching webui.py...
REM --share asks Gradio for a public link in addition to the local URL
%PYTHON% webui.py --share %COMMANDLINE_ARGS%
pause
exit /b
```

Now, when you launch webui-user.bat, a public URL that you can share with others will appear in the terminal.
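If you’d rather not modify webui.bat, there may be a gentler option. Local edits to webui.bat can get in the way of updating with git pull. Also, webui-user.bat already sets a COMMANDLINE_ARGS variable that webui.bat passes along, so changing that line to `set COMMANDLINE_ARGS=--share` should have the same effect. The sketch below automates that edit. `add_share_flag` is a hypothetical helper, not part of the webui, and it assumes the file uses the plain `set COMMANDLINE_ARGS=` form.

```python
import re

# Hypothetical helper: adds --share to the `set COMMANDLINE_ARGS=` line of a
# webui-user.bat file's text. Batch commands are case-insensitive, so the match is too.
_ARGS_LINE = re.compile(r"^(\s*set\s+COMMANDLINE_ARGS=)(.*)$", re.IGNORECASE)


def add_share_flag(bat_text: str) -> str:
    """Return bat_text with --share appended to COMMANDLINE_ARGS (no-op if already there)."""
    lines = bat_text.splitlines(keepends=True)
    for i, line in enumerate(lines):
        body = line.rstrip("\r\n")   # the line without its line ending
        ending = line[len(body):]    # "\r\n", "\n", or "" for a final line
        match = _ARGS_LINE.match(body)
        if match is None:
            continue
        prefix, args = match.groups()
        if "--share" in args.split():
            return bat_text          # already enabled, leave the text alone
        lines[i] = prefix + (args + " --share").strip() + ending
        return "".join(lines)
    raise ValueError("no 'set COMMANDLINE_ARGS=' line found")
```

Run it from the stable-diffusion-webui folder, for example `p = Path("webui-user.bat"); p.write_text(add_share_flag(p.read_text()))` with `from pathlib import Path`. Any arguments you already had are kept, and running it twice changes nothing.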

Textual Inversion

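Textual inversion is a very new technique in AI image generation and Stable Diffusion. It lets you define new concepts and pass them to the model as embeddings. Instead of retraining the model, it learns a new embedding (a vector in the text encoder’s word-embedding space) for a made-up word from a small set of example images. You then use that word in your prompts, which means you can build up a collection of embeddings representing different styles or concepts.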
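For the curious, here is the training objective from the textual inversion paper (Gal et al., 2022), in my own paraphrase of its notation rather than anything taken from the Automatic1111 code. In this notation, $v$ is the embedding being learned for the placeholder word $S_*$ and $\mathcal{E}$ is the image encoder. $z_t$ is the noised latent at timestep $t$, and $c_\theta$ is the frozen text encoder applied to a prompt $y$ containing $S_*$. $\epsilon_\theta$ is the frozen denoising network. Only $v$ is optimized:

$$
v_* = \arg\min_{v} \; \mathbb{E}_{z \sim \mathcal{E}(x),\, y,\, \epsilon \sim \mathcal{N}(0, 1),\, t} \Big[ \big\lVert \epsilon - \epsilon_\theta\big(z_t,\, t,\, c_\theta(y)\big) \big\rVert_2^2 \Big]
$$

In other words, the model’s weights stay fixed. Training just searches for the one vector that makes SD best reproduce the example images whenever the placeholder word appears in a prompt.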

The Automatic1111 repo lets us do this today! Let’s set it up.

You can read more about textual inversion here.

Setup

Per the repository, we need to create an embeddings folder in the repository’s root folder.

Once we do this, we need some embeddings. Where to find them will almost certainly change soon, but currently there is a collection of user-submitted embeddings in Hugging Face’s sd-concepts-library.

Find some embeddings you like there, and download the learned_embeds.bin file from each concept’s repository.
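The download step can also be scripted. This is a minimal sketch that assumes two things: each concept lives at huggingface.co/sd-concepts-library/&lt;concept&gt;, and its embedding is stored as learned_embeds.bin on the main branch, using Hugging Face’s `resolve/main` raw-file URL pattern. `embedding_url`, `download_embedding`, and the separate downloads folder are hypothetical choices of mine. Files go into their own folder rather than straight into embeddings, because that folder should only hold .pt files.

```python
from pathlib import Path
from urllib.request import urlretrieve

# Assumption: concepts live at huggingface.co/sd-concepts-library/<concept>
# and keep their embedding in learned_embeds.bin on the main branch.
BASE_URL = "https://huggingface.co/sd-concepts-library"


def embedding_url(concept: str, filename: str = "learned_embeds.bin") -> str:
    """Build the raw-file download URL for a file in a concept's repository."""
    return f"{BASE_URL}/{concept}/resolve/main/{filename}"


def download_embedding(concept: str, download_dir: Path) -> Path:
    """Download <concept>'s learned_embeds.bin to download_dir/<concept>/ and return its path.

    Kept out of the embeddings folder on purpose: that folder should only hold .pt files.
    """
    target_dir = download_dir / concept
    target_dir.mkdir(parents=True, exist_ok=True)
    target = target_dir / "learned_embeds.bin"
    urlretrieve(embedding_url(concept), target)  # plain HTTP GET; public repos need no login
    return target
```

For example, `download_embedding("cat-toy", Path("downloads"))` would fetch the `cat-toy` concept. The name is only an illustration, so use whichever concepts you picked. If you already use the huggingface_hub package, its `hf_hub_download` function does the same job.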

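From my brief research, a lot of these look to have been trained on only a few images. Most concept repositories include a concept_images folder with the training images, so you can semi-easily see how large an embedding’s dataset is. The size of the .bin file won’t tell you this, since it holds the learned embedding rather than the images. I found larger datasets produced better results in the few tests I ran.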
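For a sense of scale, assume an SD 1.x model, whose CLIP ViT-L/14 text encoder uses 768-dimensional token embeddings. Also assume a single learned vector stored as 32-bit floats. The embedding itself then takes:

$$
768 \ \text{values} \times 4 \ \text{bytes per value} = 3072 \ \text{bytes} \approx 3 \ \text{KB}
$$

That figure is the same whether the concept was trained on three images or thirty. Any extra size in a .bin file comes from something other than the training images, for example more than one learned vector.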

Rename the learned_embeds.bin file to name.pt, where name is the term you want to use in your prompts to refer to the embedding.
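Here is the rename step as a small Python sketch. `install_embedding` is a hypothetical helper name. It moves the downloaded file into the embeddings folder under the new name, creating the folder if it doesn’t exist yet. It also refuses to overwrite an existing embedding, which is a precaution of mine rather than a webui rule.

```python
import shutil
from pathlib import Path


def install_embedding(bin_path: Path, embeddings_dir: Path, name: str) -> Path:
    """Move a downloaded learned_embeds.bin into embeddings_dir as <name>.pt.

    `name` becomes the term you type in prompts. The embeddings folder is
    created if it doesn't exist yet.
    """
    embeddings_dir.mkdir(parents=True, exist_ok=True)
    target = embeddings_dir / f"{name}.pt"
    if target.exists():
        raise FileExistsError(f"{target} already exists; pick another name")
    shutil.move(str(bin_path), str(target))  # a rename that also works across drives
    return target
```

Using `shutil.move` instead of `Path.rename` means it still works if your downloads and the webui folder are on different drives.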

Make sure the embeddings folder contains nothing but .pt embedding files. Any other files or subfolders in it will cause errors in the terminal.
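A quick way to check for stray entries before launching is a hypothetical helper like the one below. It lists anything in the embeddings folder that isn’t a .pt file, subfolders included, so you can move those elsewhere.

```python
from pathlib import Path


def stray_entries(embeddings_dir: Path) -> list[Path]:
    """List everything in embeddings_dir that is not a .pt file, subfolders included."""
    return sorted(
        entry
        for entry in embeddings_dir.iterdir()
        if not (entry.is_file() and entry.suffix == ".pt")
    )
```

For example, `for p in stray_entries(Path("embeddings")): print("move this out:", p)` run from the webui folder.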

If everything worked correctly, when you run a txt2img prompt with the embedded term, you will see “used custom term” in your detail output.

Final Thoughts

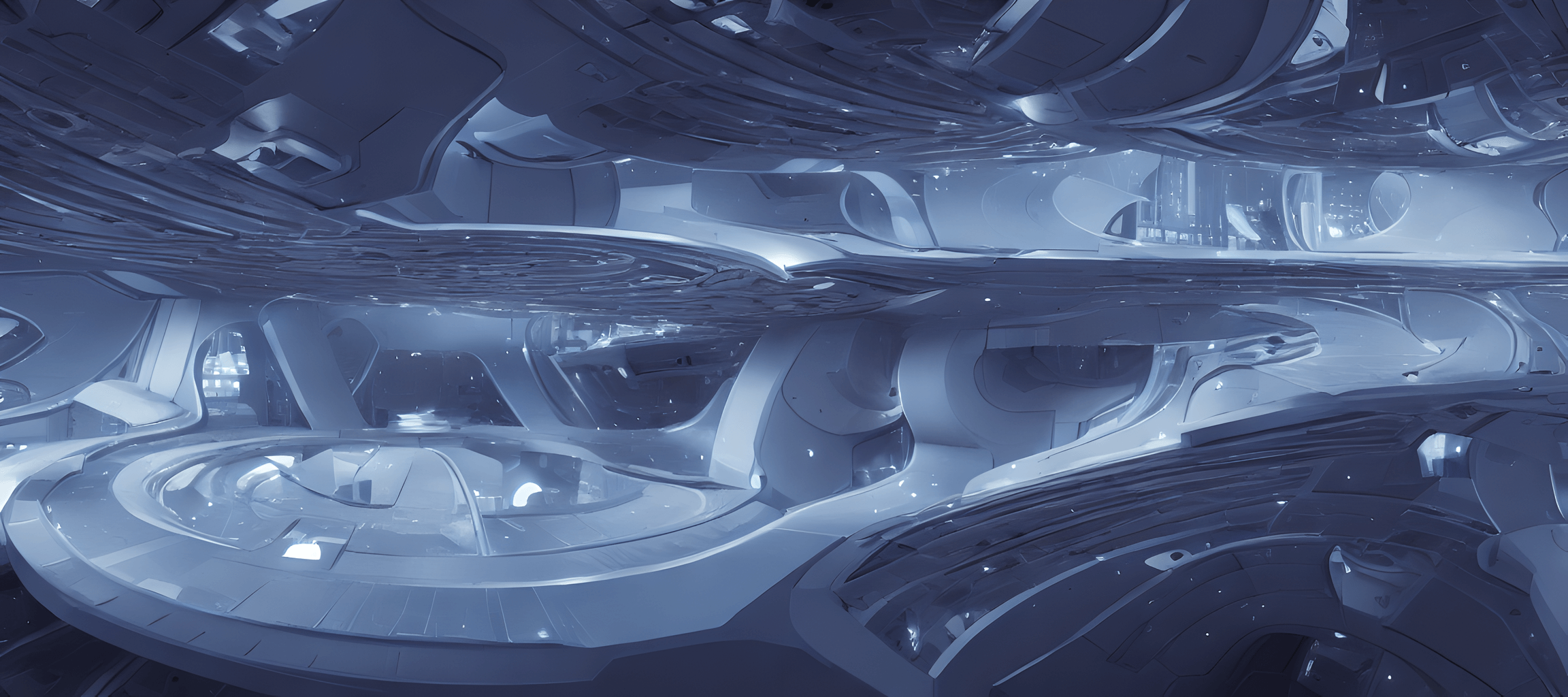

Very excited to see what new features come out in the near future. I have been playing with the tiling settings I didn’t have access to in the CompVis repository with some really cool results, which I’ll share later this week. Here’s a sneak peek of some of the final results:


I’m also very keen to generate my own embeddings and do more testing to better understand how they work and how they can be used.

That’s all for now – Enjoy!